SeaBee documentation

This website provides technical documentation for various aspects of the SeaBee workflow.

To learn more about the SeaBee project itself, please visit https://seabee.no/. To explore SeaBee data products, use the SeaBee GeoNode.

1 Overview

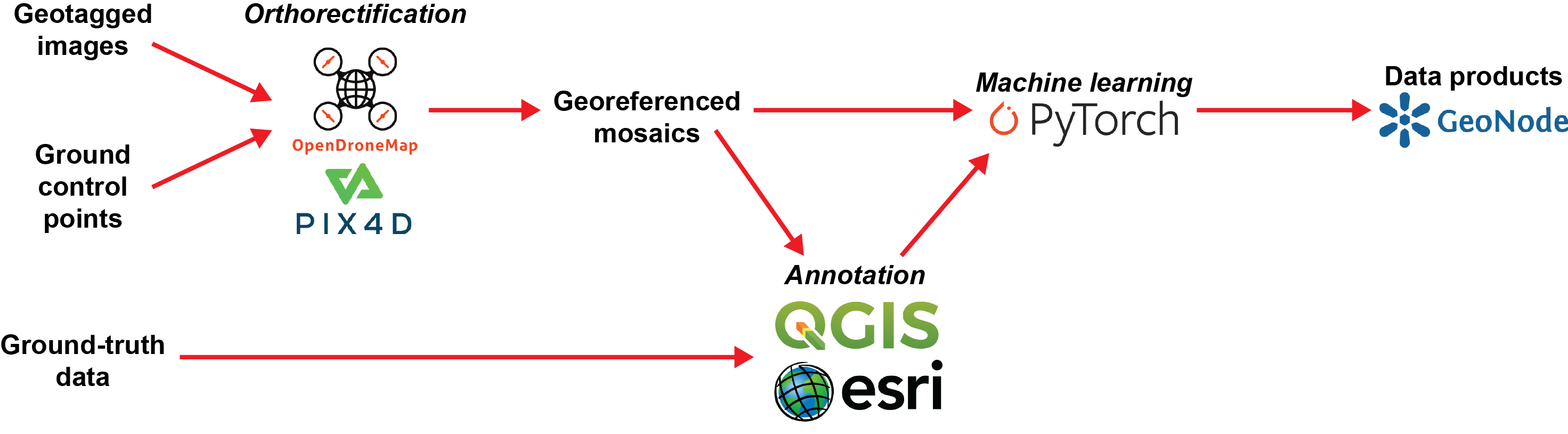

A simplified version of the SeaBee data flow is illustrated in Figure 1. Geotagged images from aerial drones are combined with ground control points using orthorectification software to produce georeferenced image mosaics.
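A georeferenced mosaic ties each pixel to a position on the ground. The minimal sketch below shows that idea using a GDAL-style affine geotransform, a common convention for georeferenced rasters. It is an illustration only. The transform values are hypothetical and not taken from a real SeaBee mosaic, and the full orthorectification performed by the software is far more involved than this single affine mapping.

```python
# Minimal sketch: mapping pixel positions in a georeferenced mosaic to map
# coordinates with a GDAL-style affine geotransform.
# ASSUMPTION: the transform values used below are hypothetical examples.
from typing import Tuple

# (origin_x, pixel_width, row_rotation, origin_y, col_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_map(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Return map (x, y) for the top-left corner of pixel (col, row)."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


# Hypothetical north-up mosaic with 2 cm pixels, coordinates in UTM metres.
# pixel_height is negative because row numbers increase southwards.
example_gt: GeoTransform = (500000.0, 0.02, 0.0, 6700000.0, 0.0, -0.02)
```

For example, `pixel_to_map(example_gt, 100, 50)` places pixel column 100, row 50 two metres east and one metre south of the mosaic's top-left corner.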

Ground-truth data collected in the field support the annotation of the mosaics, which produces training datasets for machine learning algorithms. The trained algorithms are then applied to generate data products, which are made available via the SeaBee GeoNode.

SeaBee aerial drones are flown with both RGB and multispectral cameras. In addition, the project includes hyperspectral data as well as imagery from other types of drone, such as the Otter (an unmanned surface vehicle).

Typical data products from the machine learning step include both image segmentation (e.g. habitat mapping) and object identification (e.g. seabird or mammal counts).
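The two product types summarise model output differently. A segmentation model assigns a class to every pixel, so a natural summary is the area covered by each class. An object-identification model returns individual detections, so a natural summary is a count per class. The sketch below illustrates this contrast under stated assumptions: the class labels, pixel size and confidence threshold are hypothetical, and it does not reproduce SeaBee's actual post-processing.

```python
# Minimal sketch contrasting segmentation and object-identification products.
# ASSUMPTION: class labels, pixel size and the score threshold are hypothetical.
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def habitat_areas(mask: Sequence[Sequence[int]], pixel_size_m: float,
                  labels: Dict[int, str]) -> Dict[str, float]:
    """Segmentation product: area in m^2 per labelled class in a label mask."""
    counts = Counter(value for row in mask for value in row)
    pixel_area = pixel_size_m ** 2
    # Values missing from `labels` (e.g. background) are ignored.
    return {labels[k]: n * pixel_area for k, n in counts.items() if k in labels}


Detection = Tuple[str, float]  # (class name, confidence score)


def object_counts(detections: List[Detection], min_score: float = 0.5) -> Dict[str, int]:
    """Object-identification product: detections per class at or above a score threshold."""
    return dict(Counter(name for name, score in detections if score >= min_score))
```

Keeping the confidence threshold as a parameter makes it easy to see how sensitive the counts are to that choice.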

2 Sigma2

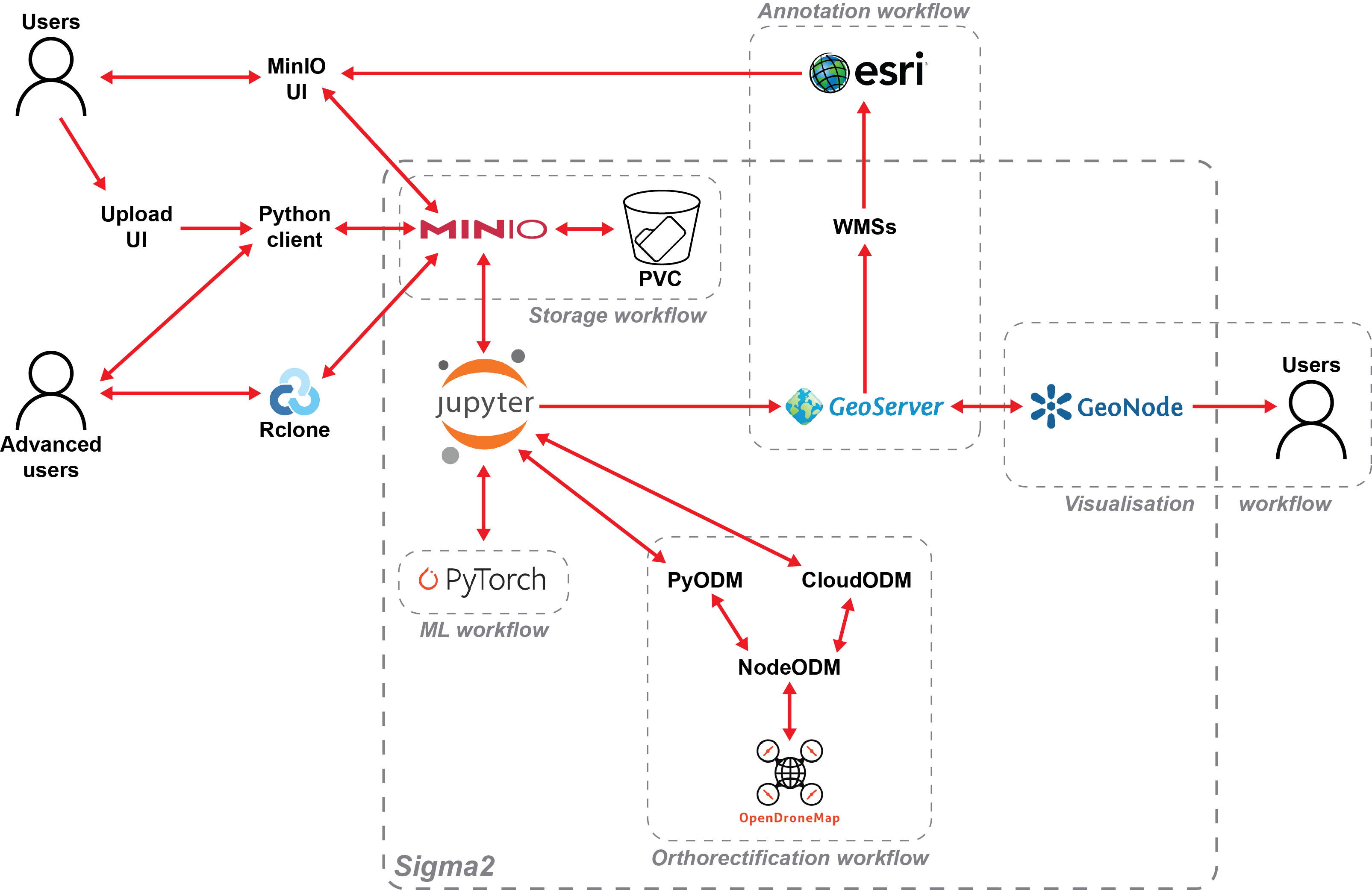

Data handling for the SeaBee project is hosted by Sigma2 on NIRD, the National Infrastructure for Research Data. All SeaBee datasets are stored in the Sigma2 data centre at Lefdal Mine in Nordfjord, one of the greenest data centres in Europe. The SeaBee project has its own Kubernetes namespace at Lefdal Mine. A high-level schematic of the current configuration is shown in Figure 2.

SeaBee uses MinIO running on Sigma2 to provide S3-compatible file storage for all SeaBee datasets. Users with MinIO accounts can upload and download data using any S3-compatible software (e.g. Rclone), as well as various web interfaces. On the platform itself, orthorectification is performed using OpenDroneMap and NodeODM, while machine learning is implemented using PyTorch.
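One way to access S3-compatible storage from Rclone is to define an S3 remote with `provider = Minio`. The sketch below generates such a section for an rclone configuration file. It is a hypothetical example: the remote name `seabee`, the endpoint URL and the credentials are placeholders, and the real values come with your MinIO account.

```python
# Minimal sketch: generating an rclone remote definition for a MinIO
# (S3-compatible) endpoint.
# ASSUMPTION: remote name, endpoint URL and credentials are placeholders.
import configparser
import io


def rclone_minio_remote(name: str, endpoint: str, access_key: str, secret_key: str) -> str:
    """Return an rclone.conf section describing an S3 remote backed by MinIO."""
    # interpolation=None so secrets containing '%' are written verbatim.
    config = configparser.ConfigParser(interpolation=None)
    config[name] = {
        "type": "s3",
        "provider": "Minio",
        "endpoint": endpoint,
        "access_key_id": access_key,
        "secret_access_key": secret_key,
    }
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    print(rclone_minio_remote("seabee", "https://minio.example.org",
                              "MY_ACCESS_KEY", "MY_SECRET_KEY"))
```

After appending the printed section to the file reported by `rclone config file`, data could be copied with a command such as `rclone copy ./flight_images seabee:my-bucket/flight_images`, where the bucket and folder names are again hypothetical.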

Finished datasets are uploaded to GeoServer and then published to the SeaBee GeoNode, where users can search, explore and combine SeaBee data products.
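GeoServer can publish layers through standard OGC web services such as WMS, which lets other software request rendered map images of a dataset. The sketch below builds a WMS 1.3.0 GetMap request URL. It assumes the layer is exposed over WMS; the service URL, layer name, bounding box and default CRS are hypothetical and are not actual SeaBee endpoints.

```python
# Minimal sketch: building an OGC WMS 1.3.0 GetMap request URL.
# ASSUMPTION: service URL, layer name, bbox and default CRS are hypothetical.
from typing import Tuple
from urllib.parse import urlencode


def wms_getmap_url(service_url: str, layer: str,
                   bbox: Tuple[float, float, float, float],
                   crs: str = "EPSG:25833",
                   size: Tuple[int, int] = (512, 512),
                   image_format: str = "image/png") -> str:
    """Return a GetMap URL.

    In WMS 1.3.0 the bbox follows the axis order defined by the CRS, so
    geographic CRSs such as EPSG:4326 expect latitude before longitude.
    """
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value selects the layer's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),
        "WIDTH": size[0],
        "HEIGHT": size[1],
        "FORMAT": image_format,
    }
    return f"{service_url}?{urlencode(params)}"
```

The resulting URL can be opened in a browser or passed to any HTTP client; the same parameters are what desktop GIS tools send when they display a WMS layer.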